2023 Robotics Student Fellows

Research Overview

Traditional autonomous robotics research tends to focus on isolated tasks rather than treating the components of a system as a whole for co-design optimization. This gap underscores the importance of co-designing complex systems, such as autonomous vehicles, with multi-objective optimization strategies. During my fellowship in Prof. Emilio Frazzoli's group, I have been working on a benchmarking subtask of the co-design project under the supervision of Dejan Milojevic.

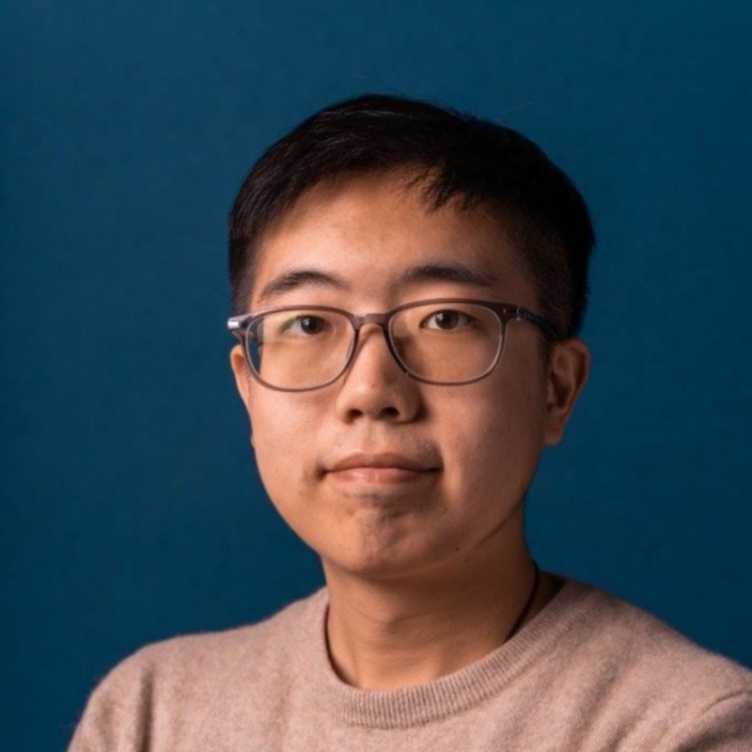

To achieve autonomous driving, vehicles rely on diverse sensors (e.g., cameras and lidars) and deep-learning-based object detection models to perceive the road environment. Each sensor and model has its own strengths and limitations, which affect system performance in different scenarios. To analyze the models, we first generated their detection results on the nuScenes validation set. Then, using features extracted from the detections (such as the object's distance to the sensor and its bounding-box size), I applied both variational and exact Gaussian Processes (GPs) to estimate each model's true-positive, false-negative, and false-positive rates together with their uncertainties. We are also working on improving the visualization of the GP estimates and integrating them into an interactive GUI to make analysis more convenient for future co-design tasks.
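As a rough illustration of the exact-GP part of this analysis, the sketch below fits a GP regression (squared-exponential kernel, Gaussian noise) to true-positive rates binned by object distance. It returns a posterior mean and standard deviation at query distances. The distance bins, rate values, kernel hyperparameters and function names are hypothetical; the project itself used more features and also variational GPs. For raw per-object hit/miss outcomes, a Bernoulli likelihood (GP classification) may be a better fit than this regression on binned rates.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=10.0, variance=0.05):
    """Squared-exponential kernel between 1-D input arrays a and b."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_posterior(x_train, y_train, x_test, noise=1e-3, **kernel_args):
    """Exact GP regression: posterior mean and std at x_test."""
    y_mean = y_train.mean()                      # constant prior mean
    K = rbf_kernel(x_train, x_train, **kernel_args) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, **kernel_args)
    K_ss = rbf_kernel(x_test, x_test, **kernel_args)
    L = np.linalg.cholesky(K)                    # K = L L^T for a stable solve
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    mean = y_mean + K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = np.diag(K_ss) - np.sum(v**2, axis=0)   # predictive variance
    return mean, np.sqrt(np.clip(var, 0.0, None))

# Hypothetical true-positive rates per 5 m distance bin
distances = np.array([5, 10, 15, 20, 25, 30, 35, 40, 45, 50], dtype=float)
tp_rate = np.array([0.92, 0.90, 0.86, 0.81, 0.74, 0.66, 0.58, 0.50, 0.43, 0.37])
query = np.linspace(0, 60, 7)
mean, std = gp_posterior(distances, tp_rate, query)
for d, m, s in zip(query, mean, std):
    print(f"{d:5.1f} m: TP rate {m:.2f} +/- {2 * s:.2f}")
```

The uncertainty band widens beyond 50 m, where there is no data. This is the behavior that makes GP estimates useful for flagging regions where a detector has been poorly characterized.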

"My time at RSF was truly remarkable, allowing me to delve into the SRL team and expand not just my robotics expertise, particularly in soft robotics, but also refine my abilities in teamwork, diligence, problem-solving, and collaboration. My heartfelt appreciation goes to my mentors and supervisor for their invaluable guidance during these past two months."

Research Overview

Soft robotics is a branch of robotics that exploits the flexibility of robot bodies, enabling them to handle tasks that traditional rigid robots cannot. However, this compliance adds complexity: the continuous properties of the materials make it difficult to model and control the robots' movements accurately. Soft robotics also explores flexible sensors and actuators to expand what these robots can do.

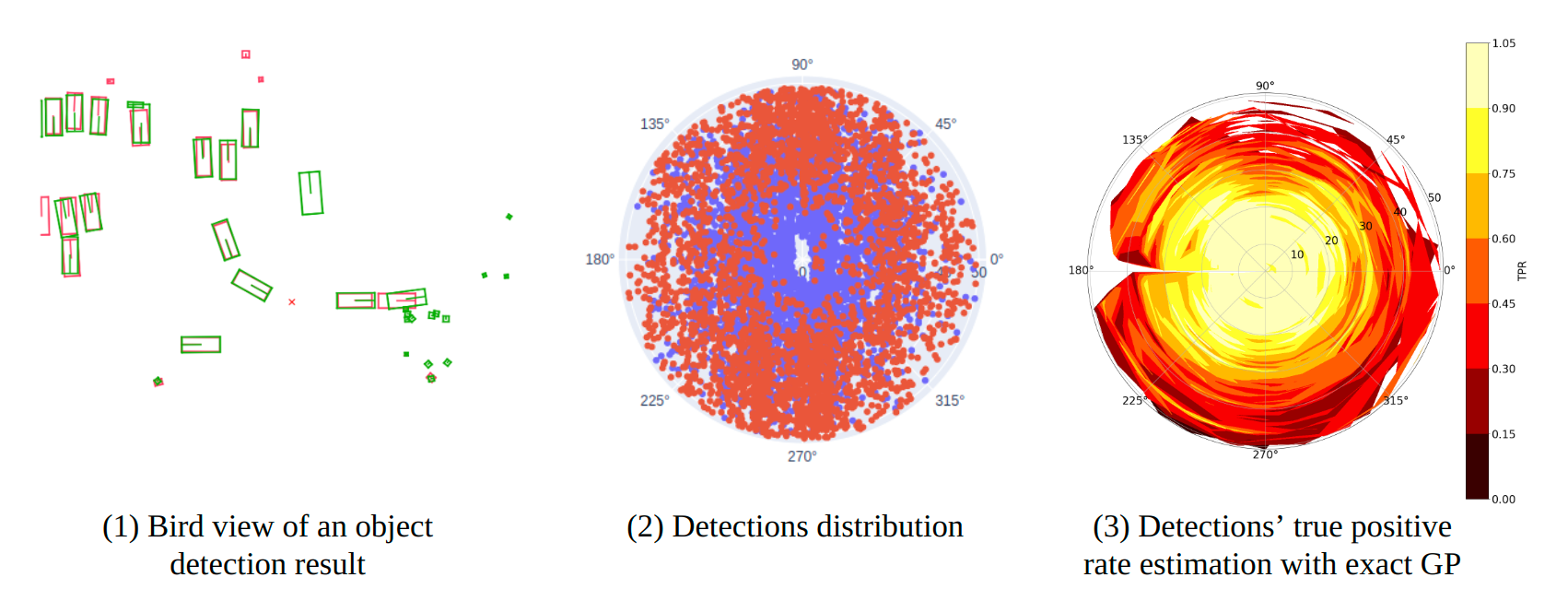

A notable example is the Hydraulically Amplified Self-Healing Electrostatic (HASEL) actuator, which uses a liquid dielectric enclosed in a flexible polymer shell. When a high voltage (typically 5-10 kV) is applied, electrostatic forces pull the electrodes together, displacing the liquid and deforming the actuator. This mechanism allows the actuator to perform mechanical work in a robot, such as lifting a weight. Because the actuator is soft and compliant, and experimental data are inherently noisy, accurate models are crucial for precise control.
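To give a sense of why kilovolt-level voltages are needed, the sketch below evaluates the idealized parallel-plate electrostatic (Maxwell) pressure p = 1/2 * eps0 * eps_r * (V/d)^2 that pulls two electrodes together. It treats the electrodes as parallel plates separated by a single dielectric layer of thickness d. This is a simplifying assumption; the real HASEL geometry (polymer shell, liquid dielectric, progressive zipping of the electrodes) is what full multiphysics models capture. The permittivity and gap values are illustrative only.

```python
EPS0 = 8.8541878128e-12  # vacuum permittivity, F/m

def electrostatic_pressure(voltage, gap, rel_permittivity):
    """Idealized parallel-plate Maxwell pressure (Pa) between two electrodes.

    voltage in volts, gap (dielectric thickness) in metres.
    """
    field = voltage / gap                      # uniform field assumption, V/m
    return 0.5 * EPS0 * rel_permittivity * field**2

# Illustrative values: 8 kV across a 100 um dielectric layer with eps_r = 2.2
p = electrostatic_pressure(8e3, 100e-6, 2.2)
print(f"{p / 1e3:.1f} kPa")
```

The quadratic dependence on V/d is the main takeaway. Doubling the voltage quadruples the pressure, which is one reason HASEL responses are strongly nonlinear in the applied voltage.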

My current research in the SRL focuses on modeling 3D HASEL actuators with industry-standard software tools such as ANSYS and COMSOL. These models cover three physical domains: electrostatics, solid mechanics, and fluid dynamics. The goal is to use the resulting simulation data to train neural networks, ultimately enabling the design and implementation of controllers for HASEL actuators.
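The following minimal sketch shows the general idea of training a neural-network surrogate on simulation output. A one-hidden-layer MLP, trained by plain gradient descent in NumPy, learns a map from normalized voltage to normalized stroke. The data here is a synthetic, roughly quadratic stand-in, not ANSYS or COMSOL output. The network size, learning rate and variable names are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for simulation output (hypothetical): normalized
# voltage -> normalized stroke, roughly quadratic like an electrostatic force.
voltage = np.linspace(0.0, 1.0, 50)[:, None]
stroke = voltage**2 + 0.01 * rng.standard_normal(voltage.shape)

# One-hidden-layer MLP surrogate: stroke_hat = tanh(V W1 + b1) W2 + b2
hidden = 16
W1 = rng.normal(0.0, 1.0, (1, hidden))
b1 = np.zeros(hidden)
W2 = rng.normal(0.0, 0.1, (hidden, 1))
b2 = np.zeros(1)

def forward(x):
    h = np.tanh(x @ W1 + b1)
    return h, h @ W2 + b2

lr, losses = 0.1, []
for step in range(3000):
    h, pred = forward(voltage)
    err = pred - stroke
    losses.append(float(np.mean(err**2)))
    # Backpropagate the mean-squared error through both layers
    g_pred = 2.0 * err / len(voltage)
    g_W2, g_b2 = h.T @ g_pred, g_pred.sum(axis=0)
    g_h = (g_pred @ W2.T) * (1.0 - h**2)       # derivative of tanh
    g_W1, g_b1 = voltage.T @ g_h, g_h.sum(axis=0)
    W1 -= lr * g_W1
    b1 -= lr * g_b1
    W2 -= lr * g_W2
    b2 -= lr * g_b2

print(f"MSE: {losses[0]:.4f} -> {losses[-1]:.4f}")
```

Once trained, a surrogate like this is cheap to evaluate and differentiable. Those two properties make it attractive for controller design compared with querying a full multiphysics simulation.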

Research Overview

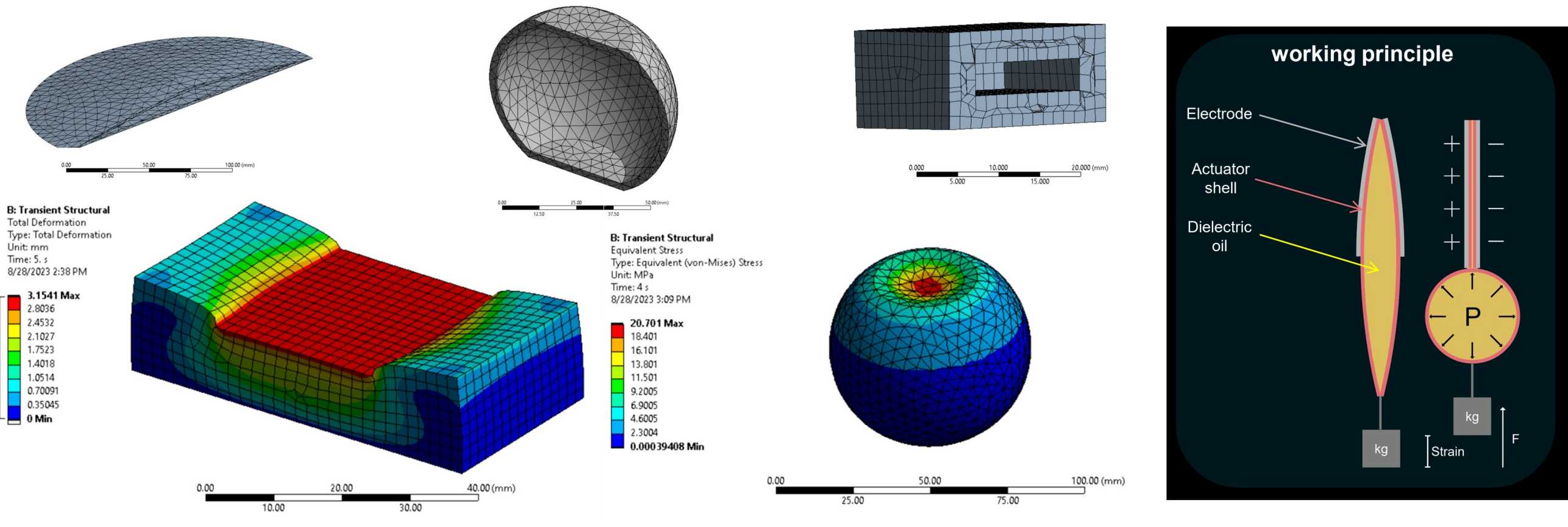

During the fellowship, my project addressed reliable state estimation and perception for autonomous robots in visually degraded environments (VDEs). In scenarios such as disaster response and subterranean exploration, where traditional sensing modalities like cameras and lidar are limited, accurate pose estimation becomes crucial. Building on previous work and using data from the ColoRadar dataset, we developed an end-to-end learned approach: a deep convolutional neural network (CNN) that estimates the 6DoF pose change of a radar sensor between consecutive scans. This approach is the first method for 6DoF alignment of 3D radar scans, and it shows potential for robust performance across diverse environments and operating conditions. By combining CNNs with optimization techniques, the method enables accurate state estimation even when traditional methods fail under challenging visual conditions.
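The network predicts a relative 6DoF transform between consecutive radar scans. To turn these increments into a trajectory (odometry), they are typically chained as homogeneous transforms. The sketch below shows only this chaining step. It assumes each increment is given as a translation plus roll/pitch/yaw, which is a hypothetical output parameterization; the CNN itself is not shown.

```python
import numpy as np

def rpy_to_matrix(roll, pitch, yaw):
    """Rotation matrix from roll/pitch/yaw (rad), using R = Rz(yaw) Ry(pitch) Rx(roll)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    Ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    Rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    return Rz @ Ry @ Rx

def to_homogeneous(dx, dy, dz, roll, pitch, yaw):
    """4x4 transform for one predicted pose change between scans k-1 and k."""
    T = np.eye(4)
    T[:3, :3] = rpy_to_matrix(roll, pitch, yaw)
    T[:3, 3] = [dx, dy, dz]
    return T

def integrate(increments):
    """Chain relative transforms into absolute poses: T_0k = T_0(k-1) @ T_(k-1)k."""
    poses = [np.eye(4)]
    for inc in increments:
        poses.append(poses[-1] @ to_homogeneous(*inc))
    return poses

# Hypothetical predictions: move 1 m forward, then turn 90 deg left, four times
steps = [(1.0, 0.0, 0.0, 0.0, 0.0, np.pi / 2)] * 4
trajectory = integrate(steps)
print(np.round(trajectory[-1][:3, 3], 6))
```

In this example the four increments trace a 1 m square and return to the origin. Because errors in each predicted increment compound along the chain, the per-scan accuracy of the network directly limits long-term drift.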

"I really enjoyed the opportunity to be part of the excellent Sensory-Motor Systems Lab, where I learned from an exceptional group of researchers and was able to put my knowledge into practice. Moreover, together with the great atmosphere of the city of Zurich, the great landscapes of Switzerland and the great environment of ETH, I affirm that it was an unforgettable experience that marked my life positively. I thank the organizers of this program and my mentors who guided me through this research experience."

Research Overview

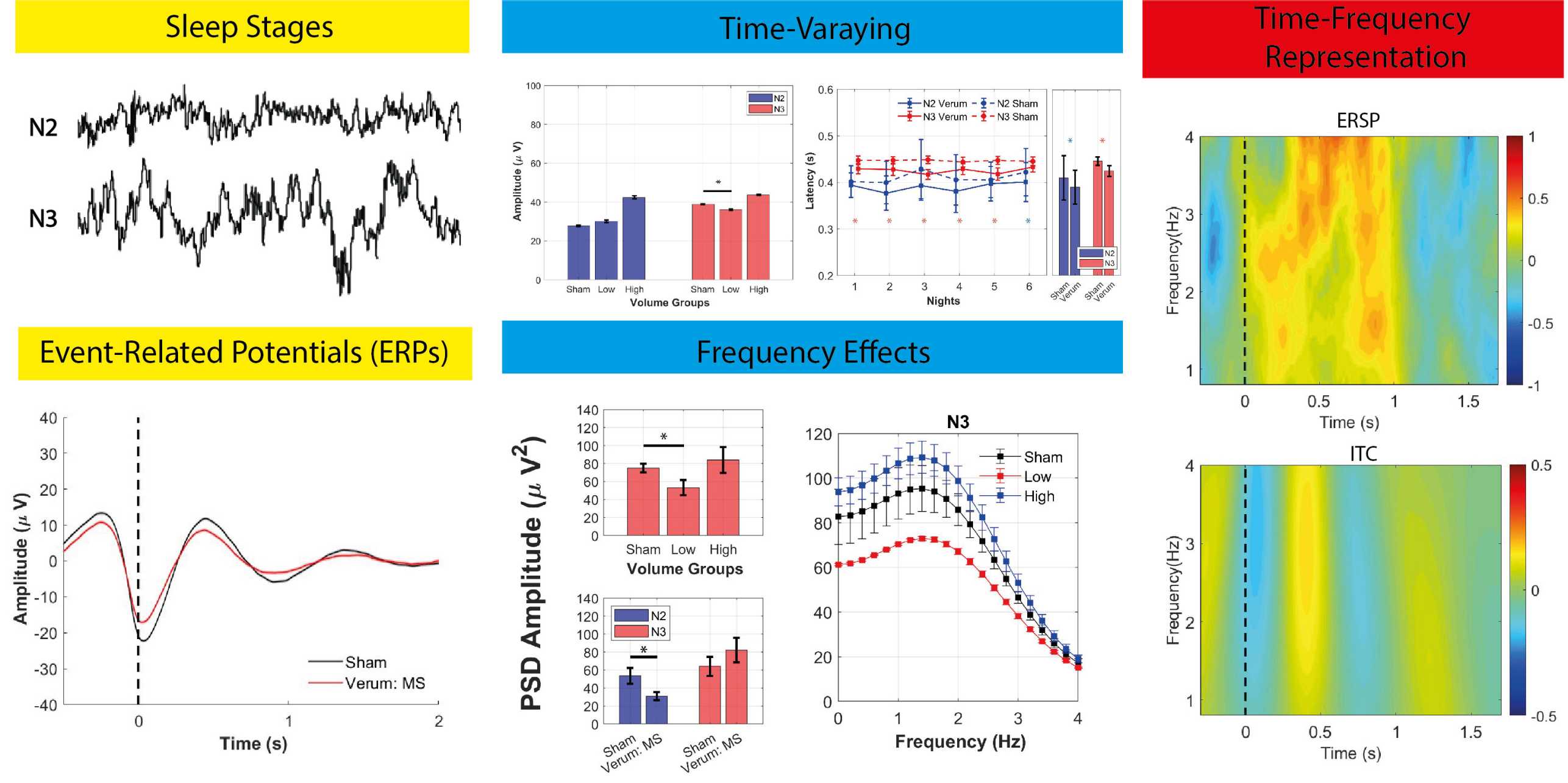

Electroencephalography (EEG) signals can be systematically modulated by external stimuli, such as sounds, presented to an individual. Acoustic stimulation during Slow Wave Activity (SWA) in sleep therefore has the potential to modulate slow-wave characteristics. This study investigates whether acoustic stimuli applied during the down-phase of SWA reduce parameters such as amplitude during the subsequent up-phase. It also aims to identify specific volume groups that could support this hypothesis.

To this end, a dataset of five healthy participants, each recorded over 12 nights with a wearable sleep band, was evaluated. Two experimental conditions were used, each for 6 consecutive nights: stimulation at different volumes (Verum) and no stimulation (Sham). We evaluated the evoked and induced responses of the Event-Related Potentials (ERPs) generated by acoustic stimulation in two sleep stages (N2 and N3). The analyses covered time-domain effects using amplitude and latency, frequency behavior using Power Spectral Density (PSD), and time-frequency effects using Event-Related Spectral Perturbations (ERSP) and Inter-Trial Coherence (ITC). In addition, paired t-tests were performed to determine which volume group (low or high) exhibits lower amplitude, latency, and power than Sham.

The results show that the low-volume Verum condition yields significantly lower values than Sham, supporting the hypothesis. These findings are relevant to clinical research on new therapies and treatments for sleep problems associated with neurological and psychiatric disorders.
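A minimal sketch of two of the analysis steps follows. The first averages stimulus-locked trials into an ERP and reads off its peak amplitude and latency within a time window. The second computes a paired t statistic across participants. The epochs are synthetic, and the window, sampling rate and per-participant values are hypothetical; the study's actual preprocessing is not described. In practice, `scipy.stats.ttest_rel` computes the same paired statistic together with a p-value.

```python
import numpy as np

def erp_peak(epochs, times, window):
    """Average trials into an ERP and return (peak amplitude, latency) in a window.

    epochs: array (n_trials, n_samples) time-locked to stimulus onset.
    times:  array (n_samples,) in seconds; window: (start, end) in seconds.
    """
    erp = epochs.mean(axis=0)                     # evoked (phase-locked) response
    mask = (times >= window[0]) & (times <= window[1])
    idx = np.argmax(erp[mask])                    # most positive deflection
    return erp[mask][idx], times[mask][idx]

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a, b."""
    d = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    return t, n - 1

rng = np.random.default_rng(1)
fs = 100                                          # hypothetical sampling rate, Hz
times = np.arange(-0.5, 1.5, 1 / fs)
# Synthetic trials: a 1 Hz slow wave peaking near 0.25 s plus noise (volts)
epochs = 40e-6 * np.sin(2 * np.pi * times) + 5e-6 * rng.standard_normal((60, times.size))
amp, lat = erp_peak(epochs, times, window=(0.0, 0.5))

# Hypothetical per-participant up-phase peak amplitudes (uV), 5 participants
verum_low = [38.1, 41.5, 35.2, 39.8, 36.4]
sham = [42.0, 44.9, 37.7, 43.1, 39.0]
t, dof = paired_t(verum_low, sham)
print(f"peak {amp * 1e6:.1f} uV at {lat:.2f} s; t({dof}) = {t:.2f}")
```

A paired test is used because each participant contributes both a Verum and a Sham condition. The test then works on within-participant differences rather than treating the two conditions as independent groups.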

Research Overview

During the fellowship, I participated in designing and developing a lower-limb exoskeleton for children with cerebral palsy. The exoskeleton consists of two lower-limb orthoses, manufactured entirely by 3D printing, and a control system based on inertial measurement unit (IMU) sensors, whose angle measurements during walking are used to control the motors. The prototype mainly provides correct knee extension during the stance phase of gait, giving the patient support and stability. This matters because people with this condition usually present a crouch gait. Systems of this type have important advantages and applications in rehabilitation.
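The controller itself is not described in detail, so the following is only a generic sketch of how IMU angles could drive a knee actuator. Each segment's pitch is estimated with a complementary filter that fuses gyro integration with accelerometer tilt. The knee flexion is taken as thigh pitch minus shank pitch, and a proportional command pushes the knee toward extension only during stance. The sign conventions, the 5 degree target, the gain and the source of the stance flag are all hypothetical assumptions.

```python
import math

def complementary_filter(angle, gyro_rate, accel_x, accel_z, dt, alpha=0.98):
    """Fuse gyro integration (smooth but drifting) with accelerometer tilt (noisy, drift-free).

    angle and return value in rad; gyro_rate in rad/s; accel in any consistent unit.
    """
    accel_angle = math.atan2(accel_x, accel_z)   # tilt relative to gravity
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle

def knee_command(thigh_angle, shank_angle, in_stance, target_deg=5.0, gain=2.0):
    """Hypothetical proportional command driving the knee toward extension in stance."""
    knee_flexion = math.degrees(thigh_angle - shank_angle)  # assumed sign convention
    if not in_stance:
        return 0.0                                # leave the swing phase unassisted
    return gain * (target_deg - knee_flexion)     # negative value -> extend the knee

# Hypothetical usage: stationary sensor tilted 45 degrees, gyro reading zero
angle = 0.0
for _ in range(500):
    angle = complementary_filter(angle, 0.0, 1.0, 1.0, dt=0.01)
print(round(math.degrees(angle), 2), knee_command(0.5, 0.0, in_stance=True))
```

The complementary filter is a common lightweight choice on embedded hardware. A Kalman filter would be the heavier alternative if sensor noise models are available.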

"My time at ETH Zurich was truly memorable, immersed in its dynamic atmosphere, stunning landscapes, and a community of exceptional individuals. Through the Robotics Student Fellowship, I connected with peers who share my enthusiasm for their fields and are at the forefront of advancing robotics. Delving into AI and robotics research during my summer break was an absolute blast!"

Research Overview

Sports like soccer are more than just physical activities; they are intricate displays of human sensory perception combined with motor skills. This complexity has sparked scientific interest in not only capturing but also emulating these multifaceted behaviors in robots, thereby enhancing their locomotion capabilities.

My time at the CRL has been truly enriching. I have been working on a project that aims to replicate key soccer behaviors of the Robotis OP3 robots used by Google DeepMind, using massively parallel deep reinforcement learning in simulation. Google DeepMind has trained these miniature humanoid robots to excel at soccer skills, including tackling, scoring, and fast recovery from falls. Using the Legged Gym framework from the RSL, I taught these robots to walk and to kick a ball in different directions toward a goal on a soccer field based on RoboCup rules. Massive parallelism allowed me to speed up training and tune the rewards more effectively.
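Massively parallel training computes rewards for thousands of simulated environments at once, so reward terms are written as vectorized operations over a batch dimension. The sketch below shows one hypothetical shaping term for kicking toward a goal. It combines the ball's velocity component toward the goal with a bonus that grows as the ball nears the goal. The term, its name and `sigma` are illustrative assumptions, not the rewards used in this project. NumPy is used here for self-containment, whereas Legged Gym itself operates on PyTorch tensors on the GPU.

```python
import numpy as np

def kick_reward(ball_pos, ball_vel, goal_pos, sigma=1.0):
    """Per-environment shaping reward for kicking a ball toward a goal.

    ball_pos, ball_vel, goal_pos: arrays (num_envs, 2) on the field plane.
    Returns an array (num_envs,): ball speed toward the goal plus a proximity bonus.
    """
    to_goal = goal_pos - ball_pos
    dist = np.linalg.norm(to_goal, axis=1)
    direction = to_goal / np.maximum(dist, 1e-6)[:, None]   # unit vector to goal
    speed_to_goal = np.sum(ball_vel * direction, axis=1)    # projected ball velocity
    return np.clip(speed_to_goal, 0.0, None) + np.exp(-dist**2 / sigma**2)

# Three hand-made cases: ball moving toward, away from, and across the goal direction
ball_pos = np.zeros((3, 2))
goal_pos = np.tile([4.0, 0.0], (3, 1))
ball_vel = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0]])
r = kick_reward(ball_pos, ball_vel, goal_pos)

# The same function evaluated over a large batch of random environments
rng = np.random.default_rng(0)
n = 4096
big = kick_reward(rng.uniform(-4, 4, (n, 2)), rng.normal(size=(n, 2)), np.tile([4.5, 0.0], (n, 1)))
print(r, big.shape)
```

Clipping the projected speed at zero means kicking the ball backwards is not rewarded. An explicit penalty term would be a separate design decision.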

The next steps of this project are combining these complex skills into a single policy and ensuring reliable sim-to-real transfer to the hardware. Both remain active research topics in reinforcement learning and robotics.

"The opportunity RSF provided me to carry out research at ETH is something I'll never forget. Meeting fantastic folks from various backgrounds but all sharing a love for robotics was amazing. This experience really pushed my research journey forward! A big shoutout to my supervisors at the Robotic Systems Lab, fellow students, and the organizers of the program for making it all happen."

Research Overview

The widespread integration of neural networks across diverse domains raises an intriguing challenge: accommodating constraints, which is particularly important in safety-critical scenarios. Such constraints vary in complexity: they can be convex or non-convex, fixed or changing over time. How to integrate these constraints with neural networks so that the outputs are guaranteed to comply is a pivotal question.

In this context, Tordesillas et al. recently introduced RAYEN, an approach that enforces hard convex constraints on neural network outputs or latent variables [1]. However, RAYEN relies on an offline phase that runs computationally intensive interior-point optimization for constraints known in advance. As a result, it struggles to accommodate non-fixed and non-convex constraints, which is a shortcoming in real-time applications.
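To make the ray-based idea concrete, here is a minimal sketch for the simplest case of linear constraints A y <= b. Given a strictly interior point y0, the unconstrained network output v is treated as a step from y0. That step is shortened just enough to stay inside the polytope, so feasibility holds by construction and the map stays differentiable almost everywhere. This is an illustration of the principle, not RAYEN's exact formulation or code; the paper also covers other convex constraint types (e.g. convex quadratic and second-order cone constraints). The function name `ray_project` is hypothetical.

```python
import numpy as np

def ray_project(v, y0, A, b):
    """Map an unconstrained network output v into the polytope {y : A y <= b}.

    y0 must be strictly interior (A y0 < b). In RAYEN such a point comes from
    an offline computation; computing it online is the motivation for RAYEN++.
    """
    slack = b - A @ y0                      # positive for an interior point
    kappa = np.max(A @ v / slack)           # > 1 means y0 + v would violate a constraint
    return y0 + v / max(1.0, kappa)         # shrink v only when it leaves the set

# Unit box |y_i| <= 1 in 2-D, with the origin as interior point
A = np.array([[1.0, 0.0], [-1.0, 0.0], [0.0, 1.0], [0.0, -1.0]])
b = np.ones(4)
y0 = np.zeros(2)
y1 = ray_project(np.array([3.0, 0.5]), y0, A, b)    # infeasible step gets scaled back
y2 = ray_project(np.array([0.2, -0.4]), y0, A, b)   # feasible step is left unchanged
print(y1, y2)
```

Each constraint i with a_i^T v > 0 satisfies a_i^T v / max(1, kappa) <= b_i - a_i^T y0, and constraints with a_i^T v <= 0 hold because y0 is interior. The output therefore never leaves the feasible set.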

In response, my research aims to extend RAYEN so that it can handle non-fixed constraints online and address non-convex constraints more flexibly through sequential convexification. This extension makes constrained neural networks suitable for real-time deployment.

To this end, I propose RAYEN++, an online adaptation of RAYEN. By embedding constraint processing and interior-point solving within the forward pass, RAYEN++ leverages differentiable optimization, allowing gradients to be backpropagated during training. In experiments on optimization-based safety filter problems, RAYEN++ achieves solutions remarkably close to the global optimum and runs 2-3x faster than CVXPY solvers. These results demonstrate the feasibility of enforcing complex constraints in neural networks, expanding their applicability in optimization and machine learning.

[1] Jesus Tordesillas, Jonathan P. How, Marco Hutter: “RAYEN: Imposition of Hard Convex Constraints on Neural Networks”, 2023; arXiv:2307.08336.

Research Overview

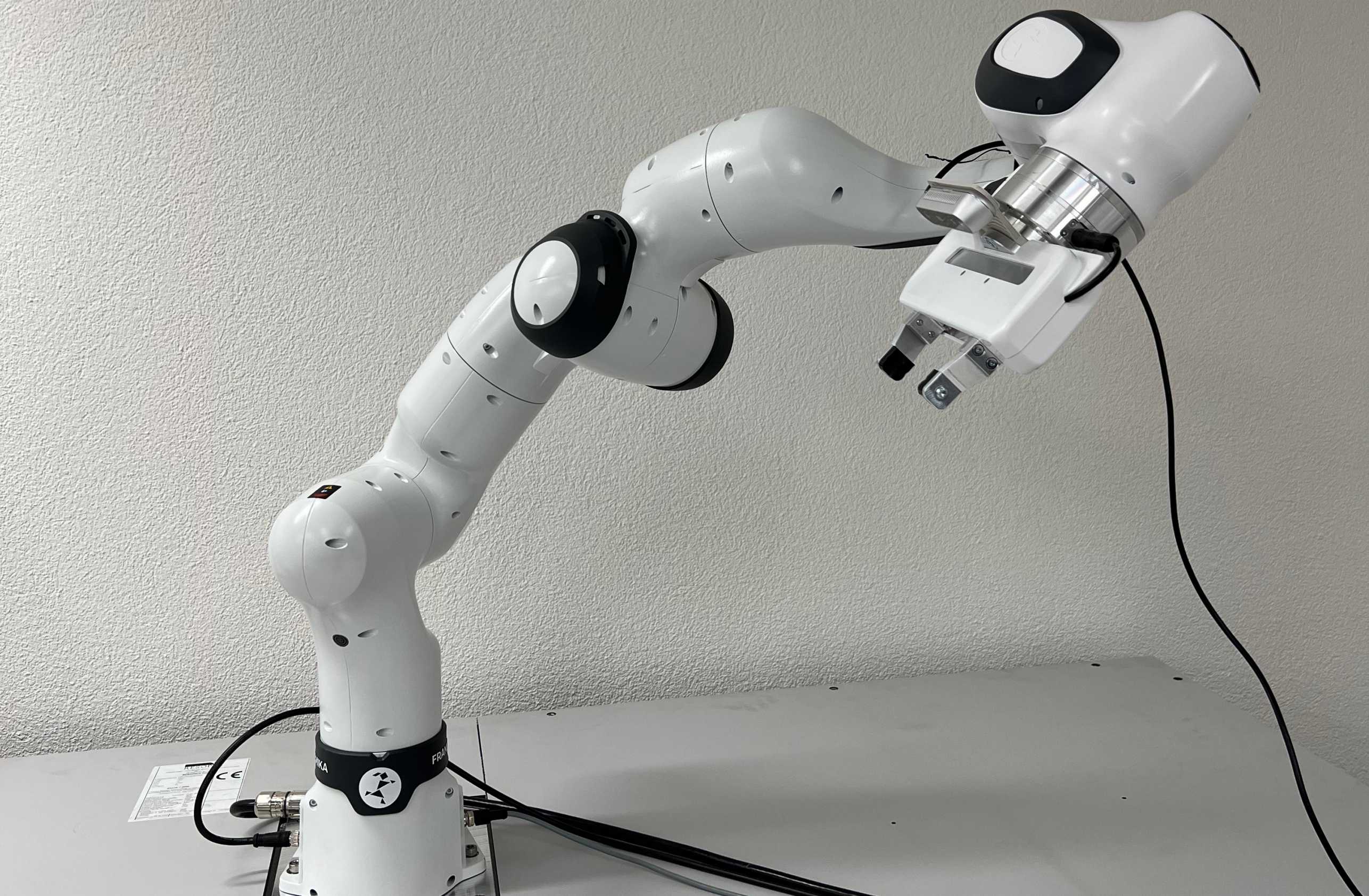

I was based in the Visual Intelligence and Systems Lab for the summer, where computer vision and machine learning techniques are being tested on real robots. I was tasked with building a software pipeline for grasp detection and execution. This would allow a robot to scan an object using a depth camera mounted on its end effector, and generate candidate grasp poses using deep learning. From here, the grasps are filtered by inverse kinematics and collision checking before executing the highest quality candidate grasp. There were various challenges with getting this complex system running on a real robot, and my programming abilities have grown significantly as a result. I am now working on adapting my pipeline for a smaller robot arm with a floating base, and the next step will be to mount this on top of a robot dog.
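The filtering and selection stage of such a pipeline can be sketched as follows. The candidates are sorted by predicted quality, and the first one that has an inverse-kinematics solution and a collision-free configuration is returned. The `Grasp` class, the callables and the stub functions are hypothetical placeholders for the real grasp network output, IK solver and collision checker.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Sequence, Tuple

@dataclass
class Grasp:
    pose: Sequence[float]   # hypothetical pose encoding, e.g. position (+ orientation)
    score: float            # quality predicted by the grasp network

def select_grasp(candidates: List[Grasp],
                 ik_solver: Callable[[Sequence[float]], Optional[Sequence[float]]],
                 in_collision: Callable[[Sequence[float]], bool]
                 ) -> Optional[Tuple[Grasp, Sequence[float]]]:
    """Return (grasp, joint_config) for the best feasible grasp, or None."""
    for grasp in sorted(candidates, key=lambda g: g.score, reverse=True):
        joints = ik_solver(grasp.pose)            # None if the pose is unreachable
        if joints is None or in_collision(joints):
            continue                              # reject infeasible candidate
        return grasp, joints                      # highest-scoring feasible grasp
    return None

# Hypothetical stubs standing in for a real IK solver and collision checker
def reachable(pose):
    return None if pose[0] > 0.5 else [0.0] * 6   # pretend x > 0.5 m is out of reach

def never_colliding(joints):
    return False

cands = [Grasp([0.6, 0.0, 0.2], 0.9), Grasp([0.3, 0.1, 0.1], 0.7), Grasp([0.4, -0.1, 0.1], 0.5)]
best = select_grasp(cands, reachable, never_colliding)
print(best[0].score if best else "no feasible grasp")
```

Checking candidates lazily in score order gives the same result as filtering all of them and then taking the best. It also avoids running IK and collision checks on low-ranked grasps, which matters when these checks dominate the runtime.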

The opportunities for learning during this fellowship have been abundant and have profoundly enriched my understanding of robotics, computer vision and the world of research. I enjoyed the challenge of learning quickly and applying my knowledge in unfamiliar contexts.

I am really grateful to ETH and the programme administrators for hosting me, and to everyone at the Visual Intelligence and Systems Lab for their exceptional support and expertise.