RobotX Research Program

ETH Zurich has launched the RobotX Research Program, with ABB as its first partner, to further expand research and education in mobile robotics and manipulation. Additional partners are expected to join in the near future. Within this framework, a long-term research program is being established that aims to bring together competences from academic and industrial research, with the goal of making robots aware of their environment and capable of moving around, making decisions, and performing tasks autonomously.

The program is structured around annual calls over a period of five years (until more partners are found) to support research projects from research groups of the ETH RobotX Initiative.

2023 Funded Projects

Teaching mobile robots long-horizon tasks that involve both locomotion and manipulation, such as moving to a table, picking up an object, and delivering it to a target location, is challenging. We formulate this task as a reinforcement learning problem and propose a framework that learns from a large set of uncurated demonstrations of the robot interacting with different environments and from a few task-specific expert demonstrations. Our method consists of three modules. First, we learn to extract meaningful behaviors from the uncurated demonstrations. Second, we introduce a teacher-student framework that learns to interact with the environment to solve the desired manipulation task. To guide learning, the teacher rewards the student agent for acting similarly to the expert demonstrations. When the student encounters novel states, however, it receives suggestions from the previously extracted behavioral prior. Our end goal is to demonstrate the desired task on a real robotic system, where we do not have access to the privileged information available in simulation. Crucially, our framework allows easy integration of real-world sensing while still leveraging the easily accessible, privileged information in simulation. Due to this modularity, only the student agent's sensors have to be adjusted, e.g., to an RGB-D camera, whereas the rest of the framework can still benefit from additional information during training. Our framework will therefore be able to learn complex control tasks and transfer them to the real world, bringing learning-based mobile manipulation robots closer to deployment.
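The interplay between teacher, student, and behavioral prior can be illustrated with a minimal Python sketch. It is not the project's implementation: the Gaussian-shaped imitation reward, the nearest-neighbor novelty test, and the names `imitation_reward`, `is_novel`, `choose_action`, `sigma`, and `threshold` are all hypothetical choices made for illustration. Here the prior's suggestion simply replaces the student's action in novel states; a soft blend of the two would be an equally plausible reading.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]
Policy = Callable[[Vector], Vector]

def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def imitation_reward(student_action: Vector, expert_action: Vector, sigma: float = 0.5) -> float:
    """Teacher's reward (hypothetical shaping): 1.0 for matching the expert, decaying as actions diverge."""
    return math.exp(-squared_distance(student_action, expert_action) / sigma)

def is_novel(state: Vector, expert_states: Sequence[Vector], threshold: float) -> bool:
    """A state counts as novel if it is farther than `threshold` from every expert state."""
    nearest = min(math.sqrt(squared_distance(state, s)) for s in expert_states)
    return nearest > threshold

def choose_action(state: Vector, student: Policy, prior: Policy,
                  expert_states: Sequence[Vector], threshold: float = 1.0) -> Vector:
    """Act with the student near expert data; defer to the behavioral prior in novel states."""
    if is_novel(state, expert_states, threshold):
        return prior(state)
    return student(state)

expert_states = [(0.0, 0.0), (1.0, 0.0)]
student = lambda s: (0.1, 0.0)  # stand-in for a learned student policy
prior = lambda s: (0.0, 0.5)    # stand-in for the extracted behavioral prior
print(choose_action((0.2, 0.1), student, prior, expert_states))  # (0.1, 0.0)
print(choose_action((5.0, 5.0), student, prior, expert_states))  # (0.0, 0.5)
print(imitation_reward((0.1, 0.0), (0.1, 0.0)))                  # 1.0
```

The novelty test is deliberately simple; a learned density or uncertainty estimate would be a more realistic choice for high-dimensional states.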

Principal Investigator

Prof. Otmar Hilliges

Prof. Stelian Coros

Start (duration)

01.10.2023 (18 months)

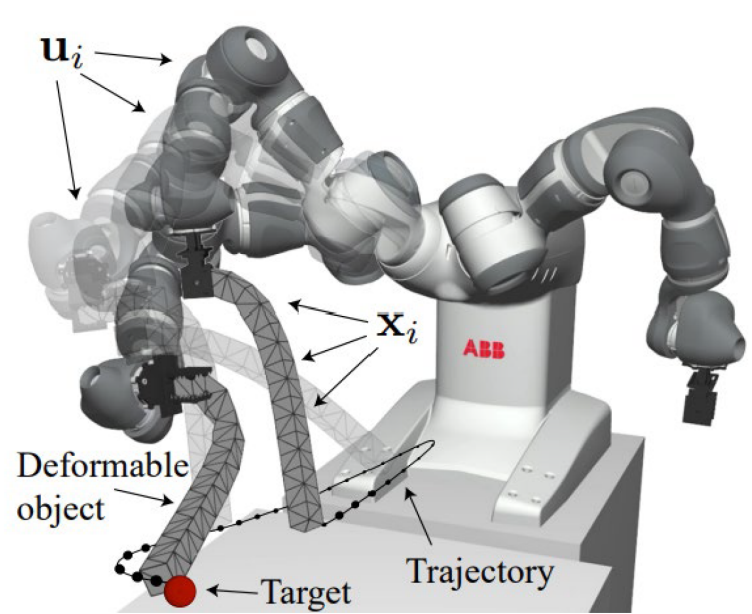

Many of the items we interact with in our daily lives are soft and squishy. While research on robotic manipulation of rigid objects is advancing rapidly, the same cannot be said for deformable objects. Addressing this gap requires advanced optimal control and learning methods that allow robots to handle soft objects. The goal is to equip robots with human-like abilities, such as folding clothes and passing soft parcels, with precision, flexibility, and adaptability. Advancements in this field are expected to have far-reaching implications for industries including manufacturing, healthcare, and logistics, and to contribute to the overall progress of robotics.

In this proposal, we focus on the decision-making process involved in activities such as folding clothes. For example, the robot must identify the type of cloth and its properties, such as material, texture, and size. Based on this information, it must determine the best folding strategy, taking into account the cloth's tendency to stretch or wrinkle. Next, the robot must use its sensors to detect the position and orientation of the cloth, as well as any obstacles that may interfere with folding. It must then use its actuators to execute the appropriate movements, such as picking up, manipulating, and folding the cloth. The entire process requires integrating perception, decision-making, and motor control, as well as the ability to adapt to changing conditions, such as unexpected obstacles or changes in the cloth's shape.

Principal Investigator

Prof. Stelian Coros

Start (duration)

01.07.2023 (18 months)

The ALLIES project aims to develop an intelligent legged robot that can understand its environment, perform complex sequences of mobile manipulation tasks, and assist people autonomously, using only natural-language instructions as the interface. Such a technology could free people from tedious household chores and improve their quality of life, especially for people with motor impairments, since it could greatly reduce their dependence on a caregiver.

The legged manipulator developed at RSL can already perform specific predefined tasks but falls short in reasoning about its environment and completing complex sequences of tasks. In this project, we aim to build on our initial investigations into semantic understanding, robotic planning, and exploration to achieve a high degree of autonomy, allowing the robot to better comprehend and interact with its surroundings. Large language models (LLMs) will be used to understand verbal commands from a human operator and decompose them into a plan that the robot can execute. In addition, vision-language models (VLMs) and open-vocabulary object detection methods will be used to build a representation of the robot's surroundings and to provide the perception and localization capabilities it needs to execute the plan successfully.

Principal Investigator

Prof. Marco Hutter

Start (duration)

01.07.2023 (18 months)

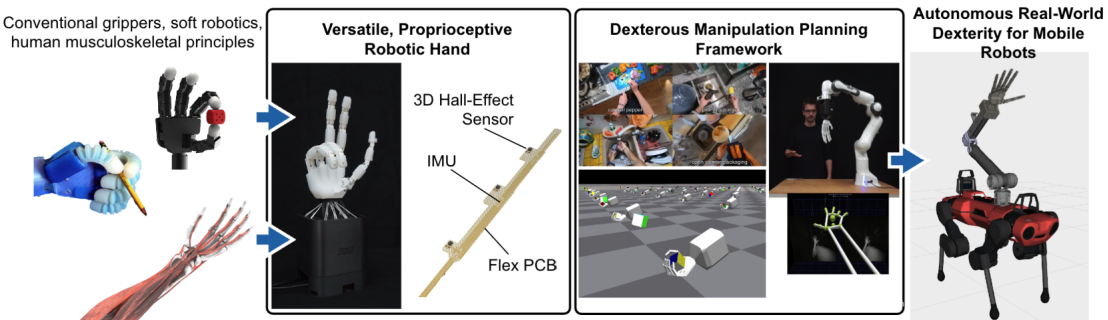

Mobile robots with mounted manipulators allow for automating daily tasks of increasing complexity in environments designed for humans. However, versatile manipulation with human-like dexterity and autonomy is still beyond current capabilities.

To develop human-like manipulation skills, we propose a system design for a versatile, cost-efficient, agile, and compliant robotic hand with accurate position and tactile sensing. This hand will be integrated with an existing robotic arm on an ANYmal quadrupedal robot to solve real-world challenges in assisting humans such as opening doors, pressing buttons, picking up objects from the floor, and performing robot-to-human handover tasks.

To solve complex manipulation tasks with this robotic hand, we propose a data-driven, deep-learning-based framework for dexterous grasp planning. By pre-training our robot transformer on available crowd-sourced point-of-view video datasets (3,670 hours) of humans performing daily tasks, we will reduce the need for time-intensive robot demonstrations for training by an order of magnitude.

We will be the first to equip quadrupedal robots with the abilities for versatile, shape-invariant grasping using different grasp types, dexterous in-hand manipulation and re-orientation of objects, and grasp compliance for human interaction, none of which have previously been possible with the two- and three-fingered robotic grippers used today.

This robotic hand and grasp planning framework will serve as a versatile platform for future research in machine learning for robotic manipulation and could significantly increase the capabilities of mobile robots deployed in unstructured environments. The technology will enable applications such as care and service robots, inspection robots, and rescue robots.

Principal Investigator

Prof. Robert Katzschmann

Start (duration)

01.07.2023 (18 months)

Novel aerial robotic systems, such as tilt-rotor platforms, have made meaningful in-flight physical interaction possible, enabling a wealth of novel applications in industrial inspection and maintenance. In contrast to regular drones, they use individually servo-actuated arms with propellers to perform thrust vectoring and generate forces in arbitrary directions.

Precise and robust flight control is highly challenging and sensitive to modeling mismatches and unknown environmental factors. Classic control approaches applied to tilt-rotor platforms have reached their limits in both precision and robustness, mainly due to complex nonlinear dynamics and the lack of accurate models. While accurate propeller thrust models exist, the unknown dynamics and inaccuracies (e.g., backlash) of the servomotors are a significant impediment to better flight performance. Additionally, in current approaches, the actuator allocation, which distributes the desired forces and torques among the actuators, is blind to the flight context, e.g., proximity to obstacles, airflow disturbances, or precision and smoothness requirements.
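To make the notion of actuator allocation concrete, the following sketch assumes a simplified planar tilt-rotor in which every arm tilts its propeller in the x-z plane and torques are ignored. Writing each arm's thrust as a horizontal and a vertical component turns allocation into a linear problem; a weighted minimum-norm split then distributes the desired force, and the per-arm weights are one hypothetical way to inject flight context (e.g., a larger weight discourages using an arm close to an obstacle). The functions `weighted_split` and `allocate_planar` are illustrative names, not part of any existing library, and real platforms must allocate a full six-dimensional force-torque wrench.

```python
import math
from typing import List, Sequence, Tuple

def weighted_split(total: float, weights: Sequence[float]) -> List[float]:
    """Minimize sum(w_i * u_i**2) subject to sum(u_i) == total.

    Closed form (Lagrange multipliers): u_i = total * (1 / w_i) / sum_j (1 / w_j),
    so a larger weight makes an actuator "more expensive" and it takes a smaller share.
    """
    inverse = [1.0 / w for w in weights]
    norm = sum(inverse)
    return [total * x / norm for x in inverse]

def allocate_planar(fx: float, fz: float, weights: Sequence[float]) -> List[Tuple[float, float]]:
    """Toy allocation of a desired planar force (fx, fz) to tilting arms.

    Because the weighted cost separates over horizontal and vertical components,
    each is split independently (torques are ignored). The result is converted back
    to a thrust magnitude and a tilt angle measured from vertical, in radians.
    """
    horizontal = weighted_split(fx, weights)
    vertical = weighted_split(fz, weights)
    return [(math.hypot(h, v), math.atan2(h, v)) for h, v in zip(horizontal, vertical)]

# Context-blind: equal weights share a 20 N vertical force evenly.
print(allocate_planar(0.0, 20.0, [1.0, 1.0]))  # [(10.0, 0.0), (10.0, 0.0)]
# Context-aware (hypothetical): penalize arm 2, e.g., because it is near an obstacle.
print(allocate_planar(0.0, 20.0, [1.0, 3.0]))  # roughly 15 N on arm 1, 5 N on arm 2
```

In the project, such hand-set weights would be replaced by learned, context-dependent allocation policies; the sketch only shows why context changes the outcome of allocation at all.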

We are convinced that control and modeling mismatches are the main impediments to robust and precise aerial manipulation. We propose to tackle these problems by i) applying data-driven modeling techniques for actuator identification, ii) integrating the resulting model into a comprehensive simulation environment, and iii) subsequently training novel context-dependent control and allocation policies.

This will enable the system to leverage the full actuation capabilities and redundancies of tilt-rotor platforms, with the ultimate aim to create a new generation of aerial workers, capable of accurate force generation for precise flight and physical interaction with the environment.
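As a baseline for step i), actuator identification, a servo model can be fitted from logged commands and measured angles. The sketch below assumes a hypothetical first-order linear model, theta[k+1] = a * theta[k] + b * u[k], estimated by ordinary least squares. It cannot represent nonlinear effects such as backlash, which is precisely why richer data-driven models are proposed; the names `simulate` and `fit_first_order` are illustrative.

```python
from typing import List, Sequence, Tuple

def simulate(a: float, b: float, commands: Sequence[float], theta0: float = 0.0) -> List[float]:
    """Roll out theta[k+1] = a*theta[k] + b*u[k]; returns len(commands) + 1 angles."""
    angles = [theta0]
    for u in commands:
        angles.append(a * angles[-1] + b * u)
    return angles

def fit_first_order(angles: Sequence[float], commands: Sequence[float]) -> Tuple[float, float]:
    """Least-squares estimate of (a, b) via the 2x2 normal equations (Cramer's rule)."""
    if len(angles) != len(commands) + 1:
        raise ValueError("need one more angle sample than commands")
    x, y = angles[:-1], angles[1:]
    sxx = sum(t * t for t in x)
    sxu = sum(t * u for t, u in zip(x, commands))
    suu = sum(u * u for u in commands)
    sxy = sum(t * n for t, n in zip(x, y))
    suy = sum(u * n for u, n in zip(commands, y))
    det = sxx * suu - sxu * sxu
    if abs(det) < 1e-12:
        raise ValueError("commands do not excite the servo enough to identify a and b")
    a = (sxy * suu - sxu * suy) / det
    b = (sxx * suy - sxu * sxy) / det
    return a, b

# Synthetic, noise-free log from a hypothetical servo with a = 0.8, b = 0.2.
commands = [1.0] * 10 + [-0.5] * 10 + [0.3] * 10
angles = simulate(0.8, 0.2, commands)
a_hat, b_hat = fit_first_order(angles, commands)
print(round(a_hat, 6), round(b_hat, 6))  # 0.8 0.2
```

The varied step commands matter: a constant command would leave the normal equations nearly singular, which the determinant check reports.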

Principal Investigator

Prof. Roland Siegwart

Start (duration)

01.07.2023 (15 months)

2022 Funded Projects

The main objective of this project is to investigate the benefits and challenges of targeted 3D and semantic reconstruction and to develop quality-adaptive, semantically guided Simultaneous Localization and Mapping (SLAM) algorithms. The goal is to enable an agent (e.g., a Boston Dynamics Spot robot) to navigate and find a target object (or other semantic entity) in an unknown or partially known environment while reconstructing the scene in a quality-adaptive manner. We interpret quality-adaptive as making the accuracy and level of detail of the reconstruction depend on finding the target class, i.e., reconstructing an object only until we are certain that it does not belong to the target class. We divide the project into three work packages (WPs).

First, we will develop a scene representation suitable for targeted, quality-adaptive reconstruction. The representation has to accommodate 3D geometry at different levels of quality, as well as scene semantics, in a hierarchical manner while allowing efficient exploration and map updates. Second, we will develop targeted SLAM algorithms that allow an agent to find the sought semantic entity such that both the path traveled and the reconstruction time are close to minimal. In the third WP, we aim to create shorter loops between learning in simulation and testing in the real world. We want to update learned representations and navigation models with information on how the agent performs in the real world, helping to bridge the gap to successful real-world deployment. This work package also includes data collection and runs in parallel with the other WPs.
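The stopping rule behind quality-adaptive reconstruction (refine an object only until it is clearly not of the target class) could, for example, be implemented as a sequential Bayesian test. The following sketch is an assumption rather than the project's method: it accumulates detector outputs from successive views in log-odds form, with hypothetical detector rates `p_hit` and `p_false`, and stops once the posterior probability of the target class drops below `reject_below` (stop refining) or exceeds `accept_above` (treat the target as found). The function name `refine_until_decided` is illustrative.

```python
import math
from typing import Iterable, Tuple

def log_odds(p: float) -> float:
    """Convert a probability in (0, 1) to log-odds."""
    return math.log(p / (1.0 - p))

def refine_until_decided(
    detections: Iterable[bool],
    prior: float = 0.5,
    p_hit: float = 0.8,    # hypothetical P(detector fires | object is target class)
    p_false: float = 0.2,  # hypothetical P(detector fires | object is not target class)
    reject_below: float = 0.05,
    accept_above: float = 0.95,
) -> Tuple[str, int]:
    """Consume one detector output per view; return (decision, views_used)."""
    belief = log_odds(prior)
    lower, upper = log_odds(reject_below), log_odds(accept_above)
    views = 0
    for detected in detections:
        views += 1
        # Bayes update in log-odds form: add the log-likelihood ratio of this observation.
        if detected:
            belief += math.log(p_hit / p_false)
        else:
            belief += math.log((1.0 - p_hit) / (1.0 - p_false))
        if belief <= lower:
            return "not_target", views  # confident: stop refining this object
        if belief >= upper:
            return "target", views      # target found: reconstruct in full detail
    return "undecided", views           # ran out of views; keep refining

print(refine_until_decided([False, False, False, False]))  # ('not_target', 3)
print(refine_until_decided([True, True, True]))            # ('target', 3)
print(refine_until_decided([True, False]))                 # ('undecided', 2)
```

Lowering `reject_below` trades extra views (and reconstruction effort) for fewer missed targets.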

Principal Investigator

Prof. Marc Pollefeys

Dr. Iro Armeni

Dr. Daniel Barath

Start (duration)

01.09.2022 (18 months)

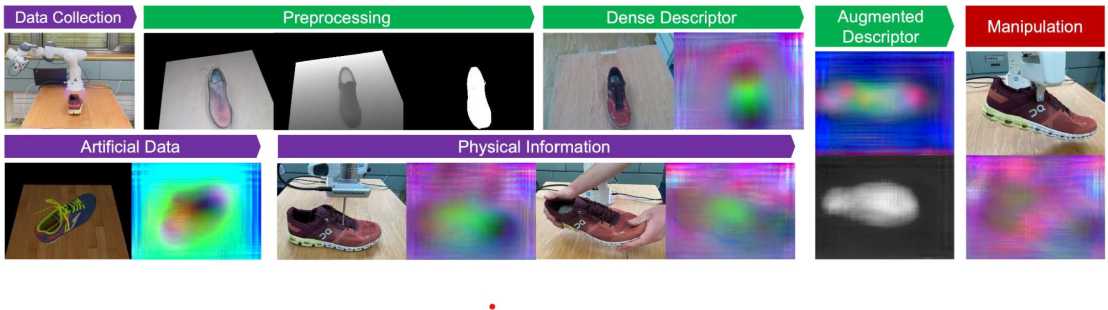

Combining robotic manipulation with computer vision allows for automating daily tasks of ever-increasing complexity. However, versatile manipulation of complex or deformable objects is still beyond current robotic capabilities: learning methods typically process data from a purely visual standpoint with end-to-end training, while humans intuitively associate visual and physical properties in their internal representation of the world.

To develop a similar internal representation, we will advance representation learning by encoding both visual semantics and intuitive physics through interactions with articulated objects. We combine recent advances in self-supervised learning, visual object representation, and robotic hand design to encode and predict object interactions. We use existing and collect new large-scale simulated datasets of deformable and articulated objects and enhance them with real-world non-visual interaction data (e.g., compliance, linkages, graspable areas) to train dense visual representations that serve as universal representations of previously unseen objects for manipulation tasks. The resulting latent space combines pixel-wise geometric correspondences with deformation, damping, and inertia, capturing the rigidity of surfaces and connection points of objects with varying degrees of deformability and articulation.

We expect our dense representation to improve downstream manipulation tasks, even for unseen objects that require generalization capabilities. Developing a universal representation of objects for manipulation will be an important step forward in combining computer vision, representation learning, and robotics. The anticipated improvements in the versatility and generality of manipulation will allow robots to be used in a range of high-impact applications, including medicine, logistics, food processing, and agriculture.
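Dense representations of this kind are typically queried by nearest-neighbor search in descriptor space. The sketch below assumes a hypothetical per-pixel descriptor that concatenates two visual features with one physical channel (e.g., an estimated stiffness); `best_match` is an illustrative name, and the brute-force search stands in for whatever matching a real system would use. The second example shows how the physical channel can disambiguate pixels that look identical.

```python
from typing import List, Sequence, Tuple

Descriptor = Sequence[float]
DescriptorMap = List[List[Descriptor]]  # indexed as [row][col]

def best_match(query: Descriptor, target_map: DescriptorMap) -> Tuple[Tuple[int, int], float]:
    """Return the (row, col) of the closest descriptor in target_map and its squared L2 distance."""
    best_pixel, best_dist = (-1, -1), float("inf")
    for r, row in enumerate(target_map):
        for c, desc in enumerate(row):
            dist = sum((q - t) ** 2 for q, t in zip(query, desc))
            if dist < best_dist:
                best_pixel, best_dist = (r, c), dist
    return best_pixel, best_dist

# Hypothetical descriptors: two visual features followed by one stiffness channel.
map_b = [
    [(0.0, 0.0, 0.1), (1.0, 0.0, 0.9)],
    [(0.0, 1.0, 0.5), (1.0, 1.0, 0.2)],
]
print(best_match((0.05, 0.95, 0.5), map_b)[0])  # (1, 0)

# Two visually identical pixels that differ only in the stiffness channel.
map_c = [[(0.5, 0.5, 0.1), (0.5, 0.5, 0.9)]]
print(best_match((0.5, 0.5, 0.85), map_c)[0])  # (0, 1)
```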

Principal Investigator

Prof. Robert Katzschmann

Prof. Fisher Yu

Start (duration)

01.12.2022 (18 months)