2022

The ETH Robotics Innovation Day will be held on July 1st, 2022.

The Arch_Tec_Lab building (HIB) at ETH Hönggerberg campus will host the 2022 ETH Robotics Innovation Day.

This forum will showcase the latest advancements in robotics, aiming to spark new applications and disruptive technologies developed in close collaboration between academia and industry.

We have an outstanding lineup of speakers representing the robotics labs at ETH Zürich. Please see the program for a detailed schedule.

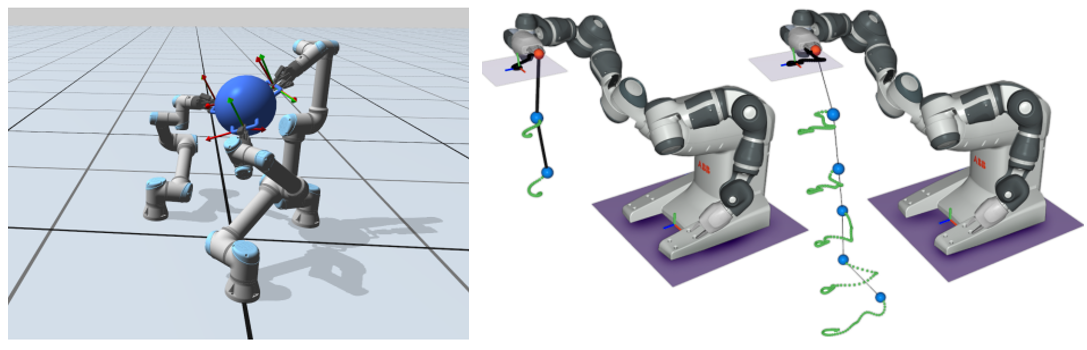

Computational Robotics Lab, Prof. Dr. Coros, Stelian

We formalize advanced simulation models to provide robots with an innate understanding of the laws of physics. Leveraging these models, we devise practical algorithms for motion planning, motion control and computational design problems. Whenever possible, we exploit data to efficiently learn solution spaces, to create faithful digital twins through real-to-sim methodologies, and to enable humans to teach robots new skills.

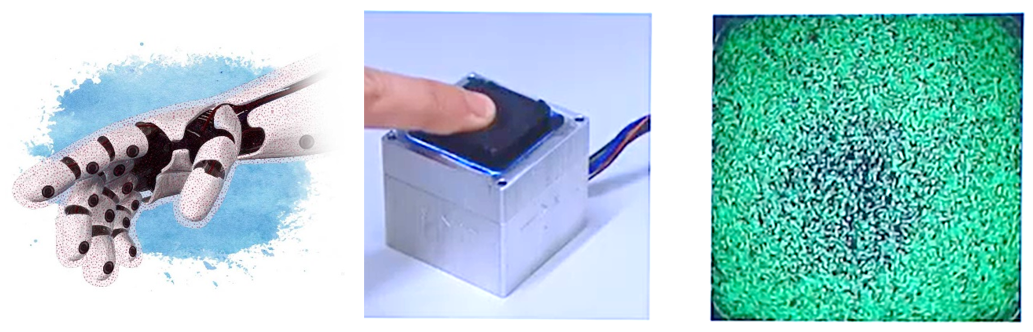

Institute for Dynamic Systems and Control, Prof. Dr. D'Andrea, Raffaello

The talk will describe the development of a soft, vision-based tactile sensor that fully exploits the high resolution of modern cameras while remaining easy to manufacture. To overcome the complexity of interpreting raw tactile data, simulation-based machine learning strategies are presented that map the tactile images to the distribution of forces (pressure and shear) applied to the sensor, which is shown to facilitate downstream robotic tasks.

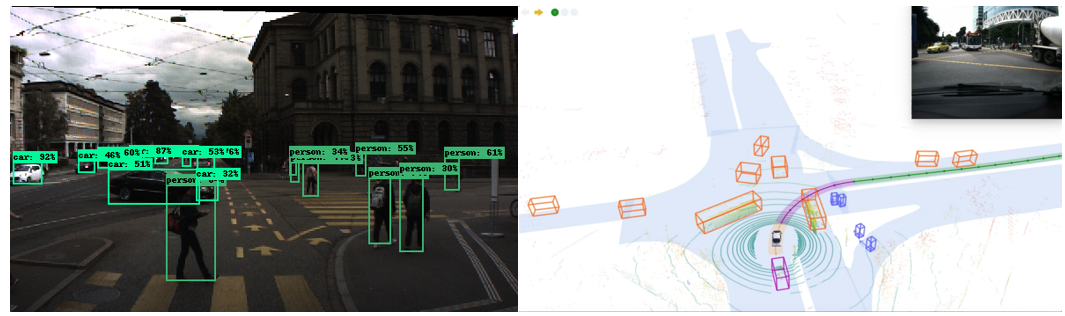

Institute for Dynamic Systems and Control, Prof. Dr. Frazzoli, Emilio

We investigate autonomous vehicles and mobility-on-demand that promise to revolutionize urban landscapes. The vision of autonomous driving builds upon developments in mathematical modeling, advanced motion control, distributed systems and machine learning. Bringing these innovations to life, we operate on a diverse set of autonomous vehicle testbeds.

Robotic Systems Lab, Prof. Dr. Hutter, Marco

Our goal is to develop machines and their intelligence to operate in rough and challenging environments. With a large focus on robots with arms and legs, the research includes novel actuation methods for advanced dynamic interaction, innovative designs for increased system mobility and versatility, and new control and optimization algorithms for locomotion and manipulation.

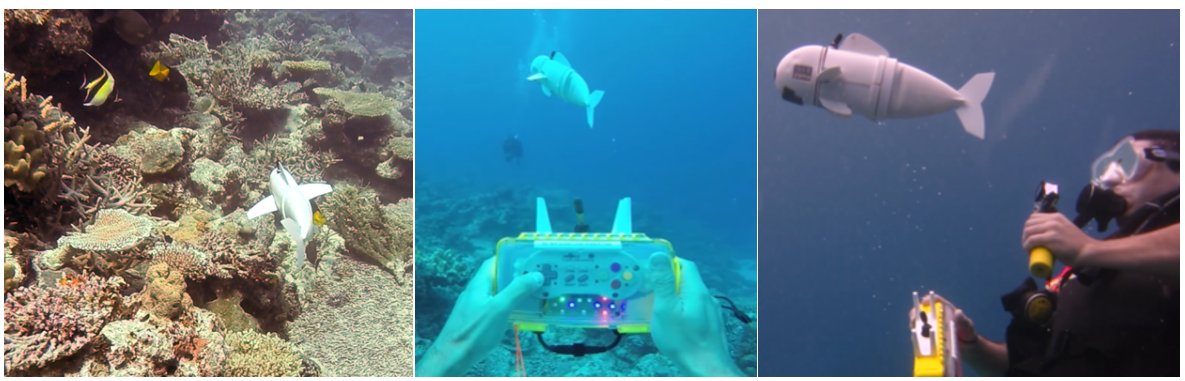

Soft Robotics Lab, Prof. Dr. Katzschmann, Robert

The Soft Robotics Lab (SRL) builds robots that safely and smoothly interact with nature. Our aquatic swimmers, walkers, grippers, and flying machines can locomote and adaptively manipulate objects under and above water because of the way we integrate functional materials with intelligent controllers.

Computer Vision and Geometry Group, Prof. Dr. Pollefeys, Marc

Our research and teaching focus on computer vision, with particular emphasis on geometric aspects. We work on the problems of structure-from-motion, self-calibration, photometric calibration, camera networks, stereo and rectification, shape-from-silhouettes, multi-view matching, 3D modeling and image-based representations.

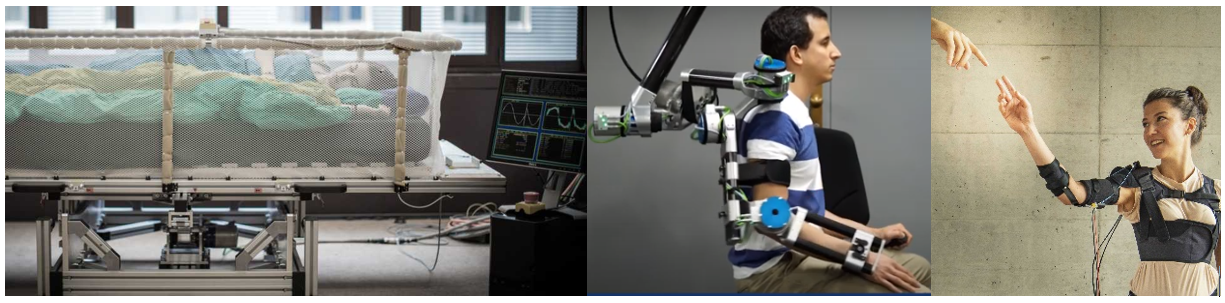

Sensory-Motor Systems Lab, Prof. Dr. Riener, Robert

The Sensory-Motor Systems Lab develops and evaluates robotic systems and therapy strategies for high-efficiency robot-assisted therapy, devices for gait rehabilitation and mobility assistance, and, among other projects, autonomous robotic platforms that provide rocking movements or postural changes to improve sleep quality.

Autonomous Systems Lab, Prof. Dr. Siegwart, Roland

We create robots and intelligent systems that are able to autonomously operate in complex and diverse environments. We are interested in the mechatronic design and control of systems that autonomously adapt to different situations and cope with our uncertain and dynamic daily environment.

Visual Intelligence and Systems Lab, Prof. Dr. Yu, Fisher

Our goal is to build perceptual robotic systems that can perform complex tasks in complex environments. Our research topics include visual representation learning, algorithms for 2D and 3D motion analysis, real-world delivery of perception models, human-machine collaboration for large-scale data exploration, visuomotor reinforcement learning for motion and manipulation, and robot interaction in dynamic environments.

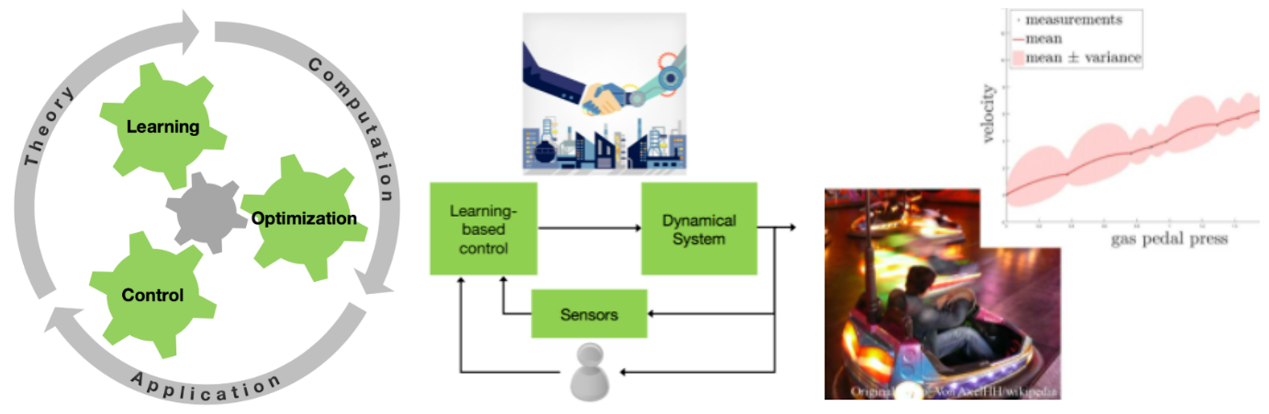

Intelligent Control Systems Group, Prof. Dr. Zeilinger, Melanie

When safety is critical, control systems are traditionally designed to act in isolated, clearly specified environments, or to be conservative against the unknown. The goal of research in Prof. Zeilinger's group is to make high-performance control available for safety-critical systems that act in varying, uncertain environments, that are potentially large-scale and composed of numerous interconnected subsystems, and that, most importantly, involve human interaction.

Environmental Robotics Lab, Prof. Dr. Mintchev, Stefano

We research and develop robots that can access complex natural environments to collect data and samples at different spatial and temporal scales. From environmental monitoring to precision agriculture and disaster mitigation, our robots aim to provide concrete solutions to some of the United Nations Sustainable Development Goals.

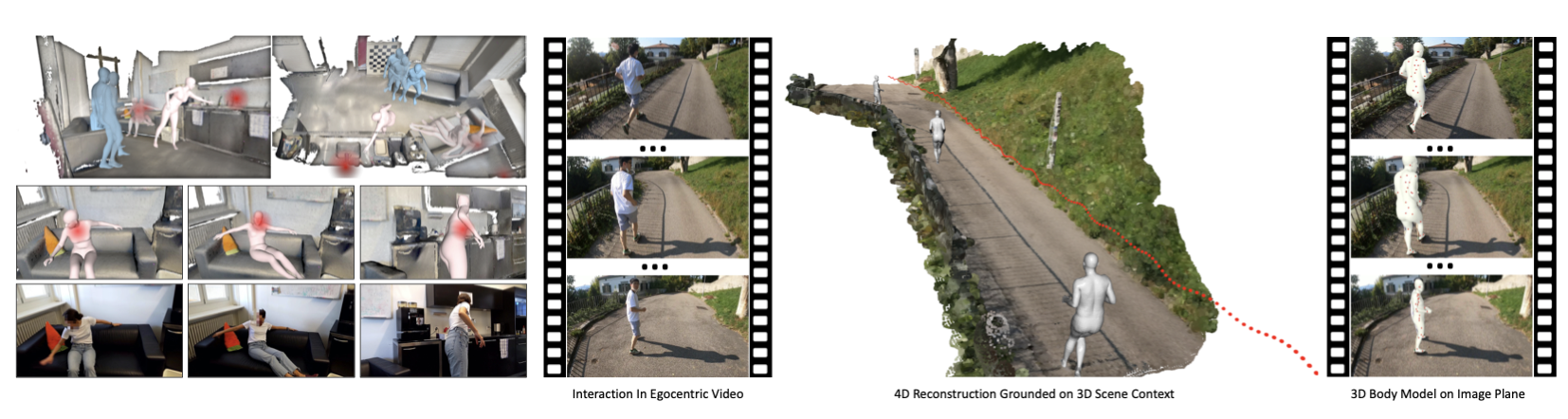

Computer Vision and Learning Group, Prof. Dr. Tang, Siyu

We work on discovering and proposing algorithms and implementations for solving high-level visual recognition problems. The goal is to advance the frontier of robust machine perception in real-world settings.

Our research interests include:

- Human motion capture and modeling.

- Human action and behavior understanding.

- Human body and hand modeling.

- Human avatar creation.

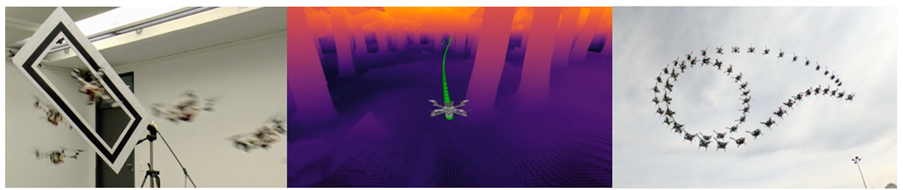

Robotics and Perception Group, Prof. Dr. Scaramuzza, Davide

Our goal is to develop perception, learning, and control algorithms that will allow autonomous drones to outperform human pilots in agility and robustness. Recently, we showed that neural networks trained in simulation and deployed on real drones allow them to achieve maneuverability and speed similar to those of drones flown manually by human pilots. We do research on both standard cameras and neuromorphic event-based cameras. Our work has been featured in the New York Times, The Economist, and Forbes. We have collaborations with the UN, NASA-JPL, Intel, SONY, and Huawei.