Research Fellows

"I would like to thank the ETH Robotics Fellowship for giving me the opportunity to work in one of the best universities in the world for robotics. This fellowship is especially unique because it embraces the multidisciplinary nature of robotics and allows researchers to cross the boundaries of our field to make true innovation."

Research overview

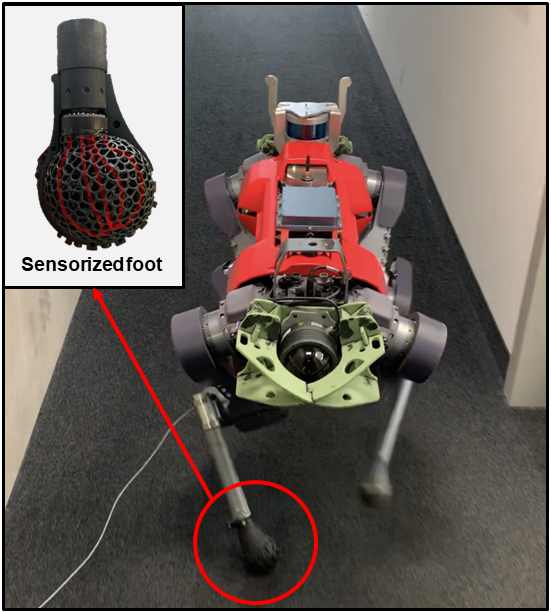

The sense of haptics, often called touch, allows animals to feel the physical interactions of their body with the environment. The stream of sensory information includes the perception of temperature, contact, force, and vibration, among others. In legged robots, haptic information caused by the mechanical interactions of bodies (e.g., forces, shear, slippage) is especially important for controlling the system and promises to give robots the ability to locomote on difficult terrains such as soft and uneven ground (e.g., mulch, leaves, sand, mud). During the ETH RobotX fellowship at the Robotic Systems Lab, Jose explored the opportunities of haptic sensing in the feet of ANYmal (a quadruped robot) to improve locomotion. He first investigated how rich haptic information coming from the feet of the robot could benefit the training of a locomotion policy in a reinforcement learning framework by comparing haptics-aware policies with those based on standard sensing modalities (e.g., vision). In collaboration with the Organic Robotics Lab at Cornell University, he presented the design and fabrication of a robust, sensorized foot based on his Ph.D. work on optoelectronic elastomeric skins for haptic sensing. When integrated with the robot, such a foot can estimate the contact state and the magnitude and direction of the reaction force. This exploratory study aimed to bring haptics closer to reality for legged robots by demonstrating a commercially feasible sensor and the benefits of using haptic information in robot control.

Jose Barreiros received his Ph.D. (2021) and MSc. (2020) in Systems Science at Cornell University with a focus on robotics. His research lies at the intersection of soft and traditional robotics, machine learning, and haptics. His mission is to push the boundaries of artificial touch from the learning and hardware perspectives to achieve ubiquitous human-like haptic perception for embodied AI agents. He has worked at Facebook Reality Labs and TieSet, a seed-stage startup working in privacy-preserving AI. In 2021, he continued his education as a research fellow at ETH Zurich, working at the Robotic Systems Lab. Previously, he studied Systems Engineering (MEng; Cornell, 2017), Entrepreneurship (MIT Sloan School of Management, 2016), and Electronics and Controls (BSc.; Politécnica Nacional del Ecuador, 2015).

Research overview

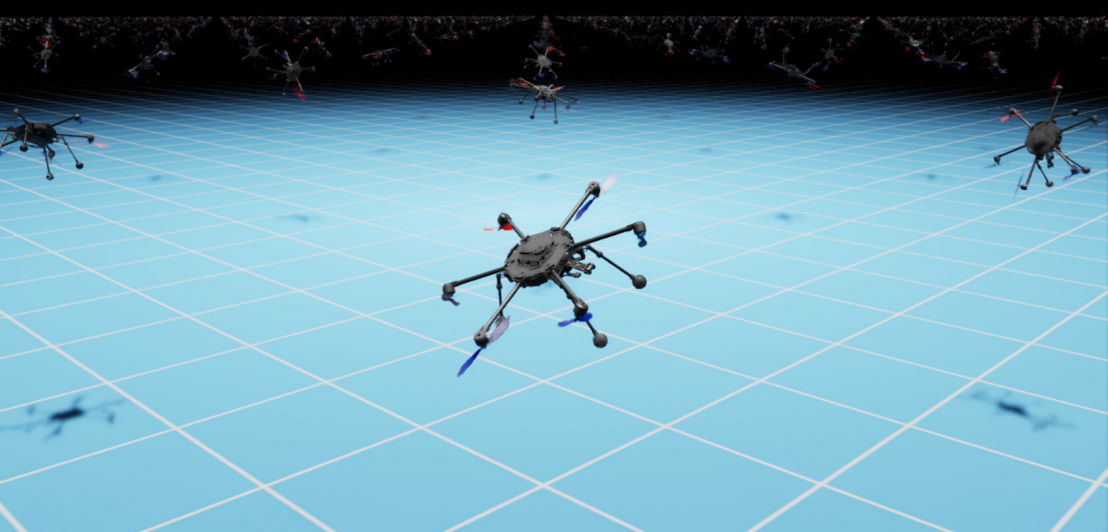

Aerial vehicles have a broad range of applications, including construction, agriculture, and utilities. In these industries, quadrotors have been a popular choice for inspection tasks due to their simplicity. However, quadrotors are underactuated, which imposes significant constraints on their feasible motions (e.g., hovering in place while tilted sideways is not possible). In contrast, overactuated aerial vehicles offer the potential to eliminate these constraints, making them valuable for inspection tasks that require multiple perspectives and for contact-based applications that require orienting mounted end-effectors.

The Autonomous Systems Lab (ASL) at ETH Zurich has been developing omnidirectional micro aerial vehicles (OMAVs) over the last several years, and their current platform features six rotors mounted on tiltable arms. With the introduction of overactuation, there are open questions about the optimal allocation of actuation across the rotors and tilt-arms. The objective of this work is twofold: first, to analyze the current physics-based allocation method, and second, to investigate the integration of learning-based methods to enhance the performance of OMAVs. Through this work, we aim to advance our understanding of overactuated aerial vehicles and create a foundation for future work in learned allocation strategies.

Rianna Jitosho is a PhD candidate in the Department of Mechanical Engineering at Stanford University, advised by Allison Okamura and Karen Liu. Her research interests include planning and controls, soft robotics, and mobile systems. Her current work focuses on leveraging reinforcement learning to enable autonomous agile maneuvers for soft robot arms. Outside of her academic pursuits, Rianna has gained industry experience at organizations including Jet Propulsion Laboratory and Honda Research Institute.

Ruyi Zhou, PhD candidate, State Key Laboratory of Robotics and Systems, Harbin Institute of Technology

Her research lies at the intersection of robotics, terramechanics, and computer vision, focusing specifically on autonomous, agile navigation and scientific exploration for space robots. Her current research topic is integrating the perception of environmental physical properties into robot locomotion and navigation to handle slippery and soft deformable terrains.

Research overview

Navigation in wild or planetary environments presents unique challenges for robotic systems beyond geometric obstacles. Non-geometric hazards, such as slippery surfaces and mud puddles, put robots at great risk of slipping, excessive foot sinkage, or self-trapping. While recent learning-based traversability models excel at adapting to various environments, they are limited to geometric obstacles and cannot handle non-geometric hazards. To this end, my research project at RSL aims to develop a traversability model for legged robots that incorporates both geometric and physical properties of the environment, enhancing local navigation capabilities through simulation-based learning and deployment in path planning workflows.

To realize this vision, I extended the simulation platform Isaac Gym with a wider variety of contact models that reflect different physical contact effects on legged robot locomotion, such as contact with soft deformable terrain and vegetation. By leveraging parallel simulation and randomization, thousands of traversal experiences on terrains with different geometric and physical properties are collected. A lightweight traversability model is trained on the collected locomotion experience to predict the risk and cost of a traversal. Furthermore, the traversability model is integrated into a local planner to plan hazard-free paths with optimized efficiency. Real-world path planning experiments with ANYmal are carried out in different physical settings to demonstrate that the proposed method outperforms existing traversability models in avoiding geometrically safe but physically risky regions, and achieves better energy efficiency in safe regions.
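As a rough illustration of how a predicted risk map could feed a planner, the sketch below runs Dijkstra's algorithm on a small grid. It is not the planner used in this project: the function name `plan_path`, the parameters `risk_weight` and `max_risk`, and the hand-written `risk_map` (standing in for the output of a learned traversability model) are all hypothetical.

```python
import heapq


def plan_path(risk, start, goal, risk_weight=5.0, max_risk=0.8):
    """Dijkstra search on a 4-connected grid of per-cell risk values in [0, 1].

    Assumptions for illustration only: cells with risk above `max_risk`
    are treated as untraversable, and entering any other cell costs one
    unit of distance plus `risk_weight` times that cell's risk.
    Returns (path, cost), or (None, inf) if the goal is unreachable.
    """
    rows, cols = len(risk), len(risk[0])
    best = {start: 0.0}          # lowest known cost to reach each cell
    parent = {}                  # back-pointers for path reconstruction
    frontier = [(0.0, start)]    # min-heap of (cost so far, cell)

    while frontier:
        cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = [cell]
            while cell in parent:
                cell = parent[cell]
                path.append(cell)
            return path[::-1], cost
        if cost > best.get(cell, float("inf")):
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if risk[nr][nc] > max_risk:
                continue  # hazard: too risky to step on
            new_cost = cost + 1.0 + risk_weight * risk[nr][nc]
            if new_cost < best.get((nr, nc), float("inf")):
                best[(nr, nc)] = new_cost
                parent[(nr, nc)] = cell
                heapq.heappush(frontier, (new_cost, (nr, nc)))
    return None, float("inf")


# Hypothetical risk map: the middle column is flat (geometrically safe)
# but its top two cells are physically risky, e.g. deep mud.
risk_map = [
    [0.0, 0.9, 0.0],
    [0.0, 0.9, 0.0],
    [0.0, 0.1, 0.0],
]
path, cost = plan_path(risk_map, start=(0, 0), goal=(0, 2))
print(path, cost)
```

In this toy map the direct route across the top row is blocked by the high-risk cells, so the returned path detours through the low-risk cell in the bottom row. Raising `risk_weight` makes the planner trade more distance for lower risk.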

Research overview

My PhD research focuses on applying computer vision techniques in forested environments, with the primary objective of promoting more sustainable use of forest natural resources. In this context, precision forestry is a core concept that drives my research. For instance, I have spearheaded the creation of an extensive synthetic dataset of annotated forest imagery aimed at facilitating tree detection and diameter estimation. This dataset, which is available to the research community, can support forest inventory monitoring and applications involving selective tree harvesting.

Another significant aspect of my research involves the implementation of computer vision models to identify and categorize various tree types. This effort is carried out in partnership with an electricity provider to develop a method for species-specific inventory and growth monitoring of trees along electric transmission lines.

Lastly, I am actively exploring computer vision-based solutions for effective navigation within forested areas. These solutions encompass the detection of trees, logs, and trails, all of which contribute to the development of autonomous forest navigation systems.

My name is Vincent, and I am a doctoral candidate at Université Laval in the Northern Robotics Laboratory under the supervision of Professors Philippe Giguère and François Pomerleau. I graduated with a master’s degree in Computer Science in 2020 from Université Laval, a bachelor’s degree in Electrical Engineering in 2018, and a Physics certificate in 2014 from Université de Sherbrooke.